SimWorld: A World Simulator for Scaling Photorealistic Multi-Agent Interactions

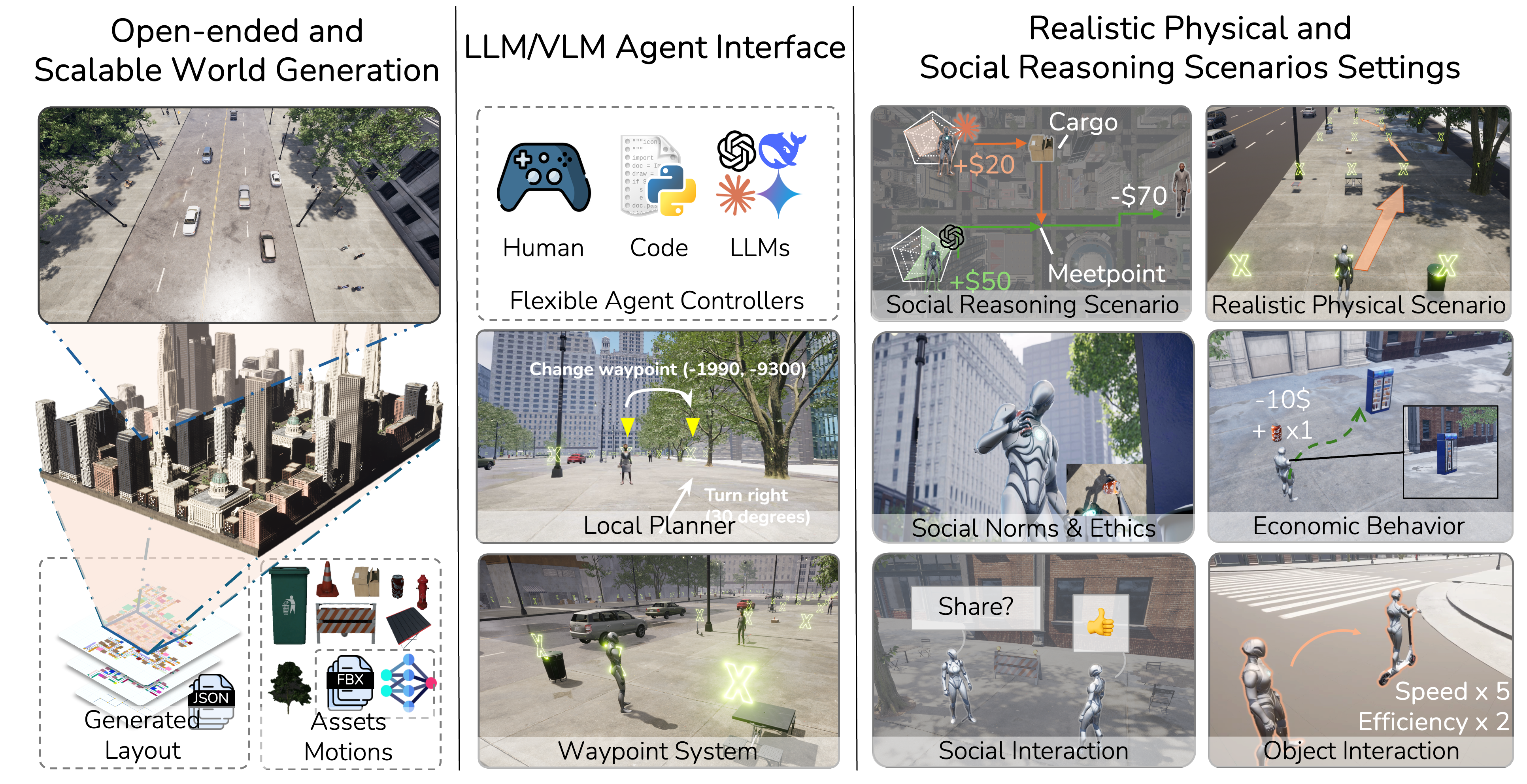

We introduce SimWorld, a new simulator built on Unreal Engine 5, designed for developing and evaluating LLM/VLM agents in rich, real-world-like settings. SimWorld offers three core capabilities:

- Realistic, open-ended world simulation, including accurate physical and social dynamics and language-driven procedural environment generation

- Rich interface for LLM/VLM agents, with multi-modal world inputs/feedback and open-vocabulary action outputs at varying levels of abstraction

- Diverse physical and social reasoning scenarios that are easily customizable by users

Large language models (LLMs) excel in structured domains like math and coding, but struggle in the noisy, dynamic conditions of the real world, where agents must navigate complex environments and social interactions. Existing simulators—whether game-like, domain-specific, or socially focused—lack realism, open-endedness, or natural language interfaces, limiting their applicability to LLM-based agents. To address these gaps, we introduce SimWorld: a platform for developing and evaluating LLM and VLM agents in rich, dynamic, and interactive settings.

Existing embodied simulators typically focus on indoor scenes. There have been urban simulators, but they either lack realism or are limited to autonomous driving. Critically, most of them do not allow users to flexibly generate new scenes or define new embodied AI tasks. In contrast, SimWorld provides a user-friendly Python API and diverse 3D assets that enable users to procedurally generate realistic and dynamic city-scale environments to support various Embodied AI research tasks. Our simulator can also be connected with large language models (LLMs) to drive the behavior of different types of agents (humans, vehicles, and robots) in the environments.

| Simulator | Open-ended / Procedural | Open-ended / Lang-Ctrl | Physical / Social Realism | Action / Abstraction | Action / Open-Vocab | Agent Type | Physics Engine |
|---|---|---|---|---|---|---|---|
| Minedojo | ✅ | ❌ | ⭐️ | Low-level | ❌ | Humanoid | Minecraft |
| Mindcraft | ✅ | ❌ | ⭐️ | High-level | ❌ | Humanoid | Minecraft |
| MetaUrban | ✅ | ❌ | ⭐️⭐️ | Low-level | ❌ | Vehicle | PyBullet |
| EmbodiedCity | ❌ | ❌ | ⭐️⭐️⭐️ | Low-level | ❌ | Drone/Vehicle | Unreal Engine |
| CARLA | ❌ | ❌ | ⭐️⭐️⭐️ | Low-level | ❌ | Vehicle | UE / Unity |
| GRUtopia | ❌ | ❌ | ⭐️⭐️ | Low-level | ❌ | Humanoid/Robot | Isaac Sim |
| OmniGibson | ❌ | ❌ | ⭐️⭐️ | High-/Low-level | ❌ | Robot | Omniverse |
| AI2-THOR | ✅ | ❌ | ⭐️⭐️ | Low-level | ❌ | Robot | Unity |
| Habitat 3.0 | ❌ | ❌ | ⭐️⭐️ | Low-level | ❌ | Humanoid/Robot | Bullet |
| SimWorld | ✅ | ✅ | ⭐️⭐️⭐️ | High-/Low-level | ✅ | Humanoid/Robot/Vehicle | Unreal Engine |

Infinite World Generation

SimWorld generates infinite worlds with diverse shapes, scales, and distributions using procedural generation. Users can easily control and edit the world layout through both code and a user-friendly UI. The generated layouts serve as a foundation for sampling corresponding assets in Unreal Engine, creating interactive and immersive scenes.
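To make the idea of a procedurally generated layout concrete, here is a minimal, self-contained sketch. It does not use SimWorld's actual API: `Building`, `generate_layout`, the building categories, and the grid-of-blocks scheme are all hypothetical names and assumptions chosen for illustration. It shows only the general pattern of producing a reproducible layout (roads plus building positions) from a seed, which an engine could later populate with assets.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Building:
    kind: str  # hypothetical category label, not a SimWorld asset name
    x: float   # position in arbitrary layout units
    y: float


def generate_layout(blocks_x, blocks_y, block_size=100.0, seed=0):
    """Return a grid road network plus one randomly placed building per block."""
    rng = random.Random(seed)  # seeded, so the same seed reproduces the same layout
    kinds = ["residential", "office", "shop"]
    width, height = blocks_x * block_size, blocks_y * block_size

    # Roads are axis-aligned segments running along the block boundaries.
    roads = [((i * block_size, 0.0), (i * block_size, height))
             for i in range(blocks_x + 1)]
    roads += [((0.0, j * block_size), (width, j * block_size))
              for j in range(blocks_y + 1)]

    # Keep each building away from the surrounding roads by a fixed margin.
    margin = 0.2 * block_size
    buildings = []
    for i in range(blocks_x):
        for j in range(blocks_y):
            x = i * block_size + rng.uniform(margin, block_size - margin)
            y = j * block_size + rng.uniform(margin, block_size - margin)
            buildings.append(Building(rng.choice(kinds), x, y))
    return {"roads": roads, "buildings": buildings}


layout = generate_layout(blocks_x=3, blocks_y=2, seed=42)
print(len(layout["roads"]), "road segments,", len(layout["buildings"]), "buildings")
```

Changing `seed`, `blocks_x`, or `blocks_y` yields a different layout, which is the property that makes this style of generation useful for producing many distinct worlds.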

Various and Dynamic Simulated Scenes

SimWorld supports varied, dynamic simulated scenes. Building on procedural generation, we developed a set of tools that let users easily control the scene layout and scene properties, including building types, the road network, roadside objects, and vegetation.

SimWorld also supports on-the-fly environment control: through the Python API, users can change the weather, the time of day, and the properties of objects and buildings while the simulation is running.

Traffic System

SimWorld features a dynamic traffic system that simulates real-world traffic flow and pedestrian behavior to make the virtual environments more immersive and interactive. Users can control traffic lights, speed limits, and pedestrian movements. The traffic agents are powered by a custom-designed system, which supports both rule-based and LLM-driven control mechanisms.
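As an illustration of what rule-based traffic control can look like, the sketch below models a fixed-cycle traffic light and a simple speed rule for a vehicle approaching it. This is not SimWorld's traffic system: the function names, the cycle durations, and the 20-unit stopping distance are assumptions made for the example.

```python
# Hypothetical fixed cycle: (state, duration in seconds).
CYCLE = [("green", 30.0), ("yellow", 5.0), ("red", 25.0)]


def light_state(t, cycle=CYCLE):
    """Return the light state at simulation time t for a repeating cycle."""
    period = sum(duration for _, duration in cycle)
    t = t % period  # wrap time into a single cycle
    for state, duration in cycle:
        if t < duration:
            return state
        t -= duration
    return cycle[-1][0]  # defensive fallback; not reached for positive durations


def target_speed(light, dist_to_stop_line, speed_limit, pedestrian_ahead):
    """Rule-based speed choice for a vehicle approaching an intersection."""
    if pedestrian_ahead:
        return 0.0  # always yield to pedestrians
    if light == "red" and dist_to_stop_line < 20.0:
        return 0.0  # stop at the line
    if light == "yellow" and dist_to_stop_line < 20.0:
        return min(speed_limit, 5.0)  # slow down when close to the line
    return speed_limit


print(light_state(12.0), target_speed("red", 10.0, 15.0, pedestrian_ahead=False))
```

An LLM-driven controller could replace `target_speed` with a model call that receives the same observations; the rule-based version gives a predictable baseline to compare against.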

Various Agent Actions

SimWorld supports various agent actions, including navigation, social behavior, object manipulation, and interaction with the environment. Users can also use the Python API to control the agent’s actions.
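The difference between high-level and low-level actions can be sketched as follows. The example expands a single high-level "navigate to goal" command into a sequence of unit moves on an integer grid. The function name `expand_navigate` and the primitive names (`move_east`, `move_north`, and so on) are hypothetical and are not taken from SimWorld's API; a real system would also need to handle obstacles and continuous motion.

```python
def expand_navigate(start, goal):
    """Expand a high-level 'navigate to goal' command into unit grid moves.

    Assumes integer (x, y) coordinates and an obstacle-free grid; moves
    along x first, then along y.
    """
    x, y = start
    gx, gy = goal
    steps = []
    while x != gx:
        step = 1 if gx > x else -1
        steps.append("move_east" if step == 1 else "move_west")
        x += step
    while y != gy:
        step = 1 if gy > y else -1
        steps.append("move_north" if step == 1 else "move_south")
        y += step
    return steps


print(expand_navigate((0, 0), (2, -1)))
```

Exposing both levels lets a planner issue one abstract command while a controller executes the primitive steps.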

Social Reasoning Simulation

SimWorld supports various social reasoning scenarios, including social interaction and reasoning about social norms. For example, users can define social reasoning tasks through the Python API; SimWorld then generates the corresponding scenarios and provides the social and physical context to the agents, which can be used to evaluate the agents' social reasoning capabilities.

Physical Reasoning Simulation

Embodied reasoning and physical interaction can also be simulated in SimWorld. In a navigation task, for example, agents are required to reach a designated goal while avoiding both static (e.g., trees, benches) and dynamic (e.g., pedestrians) obstacles. This requires multimodal perception and real-time decision-making under uncertainty and in the face of unexpected events.
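The static part of such a navigation task can be sketched with a breadth-first search over an occupancy grid. This is an illustrative baseline under simplifying assumptions (a 2D grid, four-connected moves, obstacles known in advance), not the method SimWorld or its agents use; `shortest_path` is a hypothetical helper. Dynamic obstacles such as pedestrians would additionally require replanning as the grid changes.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid where 0 is free and 1 is an obstacle.

    Returns the list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    parent = {start: None}  # also serves as the visited set
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# A wall (1s) forces a detour around the right-hand side.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(shortest_path(grid, (0, 0), (2, 0)))
```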

SimWorld Team

Yan Zhuang*, Jiawei Ren*, Xiaokang Ye*

Xuhong He, Zijun Gao, Ryan Wu, Mrinaal Dogra, Cassie Zhang, Kai Kim, Bertt Wolfinger

Ziqiao Ma, Tianmin Shu†, Zhiting Hu†, Lianhui Qin†

Maitrix.org

UCSD

JHU


@misc{simworld,
  title={SimWorld: A World Simulator for Scaling Photorealistic Multi-Agent Interactions},
  author={Zhuang*, Yan and Ren*, Jiawei and Ye*, Xiaokang and He, Xuhong and Gao, Zijun and Wu, Ryan and Dogra, Mrinaal and Zhang, Cassie and Kim, Kai and Wolfinger, Bertt and Ma, Ziqiao and Shu$^{\dagger}$, Tianmin and Hu$^{\dagger}$, Zhiting and Qin$^{\dagger}$, Lianhui},
  booktitle={Demonstration Track, IEEE/CVF conference on computer vision and pattern recognition (CVPR)},
  year={2025}
}